Reinforcement Increases the Likelihood a Behavior Will Occur Again

Basic Principles of Operant Conditioning: Thorndike's Law of Effect

Thorndike's law of effect states that behaviors are modified by their positive or negative consequences.

Learning Objectives

Relate Thorndike's law of effect to the principles of operant conditioning

Key Takeaways

Key Points

- The law of effect states that responses that produce a satisfying effect in a particular situation become more likely to occur again, while responses that produce a discomforting effect are less likely to be repeated.

- Edward L. Thorndike first studied the law of effect by placing hungry cats inside puzzle boxes and observing their actions. He quickly realized that cats could learn the efficacy of certain behaviors and would repeat those behaviors that allowed them to escape faster.

- The law of effect is at work in every human behavior as well. From a young age, we learn which actions are beneficial and which are detrimental through a similar trial-and-error process.

- While the law of effect explains behavior from an external, observable point of view, it does not account for internal, unobservable processes that also affect the behavior patterns of human beings.

Key Terms

- Law of Effect: A law developed by Edward L. Thorndike that states, "responses that produce a satisfying effect in a particular situation become more likely to occur again in that situation, and responses that produce a discomforting effect become less likely to occur again in that situation."

- behavior modification: The act of altering actions and reactions to stimuli through positive and negative reinforcement or punishment.

- trial and error: The process of finding a solution to a problem by trying many possible solutions and learning from mistakes until a way is found.

Operant conditioning is a theory of learning that focuses on changes in an individual's observable behaviors. In operant conditioning, new or continued behaviors are impacted by new or continued consequences. Research regarding this principle of learning first began in the late 19th century with Edward L. Thorndike, who established the law of effect.

Thorndike's Experiments

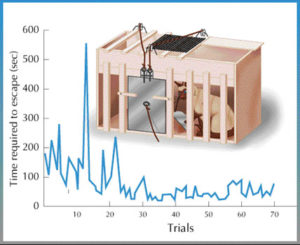

Thorndike's most famous work involved cats trying to navigate through various puzzle boxes. In this experiment, he placed hungry cats into homemade boxes and recorded the time it took for them to perform the necessary actions to escape and receive their food reward. Thorndike discovered that with successive trials, cats would learn from previous behavior, limit ineffective actions, and escape from the box more quickly. He observed that the cats seemed to learn, through an intricate trial-and-error process, which actions should be continued and which actions should be abandoned; a well-practiced cat could quickly recall and reuse actions that had been successful in escaping to the food reward.

Thorndike's puzzle box: This image shows an example of Thorndike's puzzle box alongside a graph demonstrating the learning of a cat within the box. As the number of trials increased, the cats were able to escape more quickly by learning.

The Law of Effect

Thorndike realized not only that stimuli and responses were associated, but also that behavior could be modified by consequences. He used these findings to publish his now famous "law of effect" theory. According to the law of effect, behaviors that are followed by consequences that are satisfying to the organism are more likely to be repeated, and behaviors that are followed by unpleasant consequences are less likely to be repeated. Essentially, if an organism does something that brings about a desired result, the organism is more likely to do it again. If an organism does something that does not bring about a desired result, the organism is less likely to do it again.

Law of effect: Initially, cats displayed a variety of behaviors inside the box. Over successive trials, actions that were helpful in escaping the box and receiving the food reward were replicated and repeated at a higher rate.
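The law of effect can be made concrete with a toy model in which each response the cat might make has a probability, and each consequence nudges that probability up (satisfying) or down (discomforting). The sketch below is a minimal illustration under stated assumptions: it uses a linear-operator update of the kind later used in Bush-Mosteller learning models rather than anything Thorndike himself wrote down, and the function name `law_of_effect_update`, the learning rate `alpha`, and the response labels are all hypothetical.

```python
def law_of_effect_update(probs, response, satisfying, alpha=0.2):
    """Return updated response probabilities after one consequence.

    Hypothetical linear-operator model (an assumption, not Thorndike's formula).
    probs: dict mapping each possible response to its probability (sums to 1).
    response: the response the organism just made.
    satisfying: True for a satisfying consequence, False for a discomforting one.
    alpha: assumed learning rate between 0 and 1.
    """
    others = [r for r in probs if r != response]
    if not others:
        return dict(probs)  # only one possible response: nothing to shift
    p = probs[response]
    new = dict(probs)
    if satisfying:
        # Move the rewarded response a fraction alpha of the way toward certainty,
        # shrinking every other response proportionally so the total stays 1.
        new[response] = p + alpha * (1.0 - p)
        for r in others:
            new[r] = probs[r] * (1.0 - alpha)
    else:
        # Take a fraction alpha of the punished response's probability and
        # hand it to the other responses in proportion to their current share.
        freed = alpha * p
        new[response] = p - freed
        rest = 1.0 - p
        for r in others:
            share = probs[r] / rest if rest > 0 else 1.0 / len(others)
            new[r] = probs[r] + freed * share
    return new


# Hypothetical puzzle-box trials: "pull loop" opens the door (satisfying),
# while "scratch bars" leaves the cat shut in (treated here as discomforting).
probs = {"scratch bars": 1 / 3, "meow": 1 / 3, "pull loop": 1 / 3}
for trial in range(10):
    probs = law_of_effect_update(probs, "scratch bars", satisfying=False)
    probs = law_of_effect_update(probs, "pull loop", satisfying=True)
print({r: round(p, 3) for r, p in probs.items()})
```

Each pass through the loop stands in for one trial: the response that opens the box becomes steadily more probable and the unproductive one becomes rarer, loosely mirroring the pattern described above.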

Thorndike's law of effect now informs much of what we know about operant conditioning and behaviorism. According to this law, behaviors are modified by their consequences, and this basic stimulus-response relationship can be learned by the operant person or animal. Once the association between behavior and consequences is established, the response is reinforced, and the association holds sole responsibility for the occurrence of that behavior. Thorndike posited that learning was merely a change in behavior as a result of a consequence, and that if an action brought a reward, it was stamped into the mind and available for recall later.

From a young age, we learn which actions are beneficial and which are detrimental through a trial-and-error process. For example, a young child is playing with her friend on the playground and playfully pushes her friend off the swing set. Her friend falls to the ground and begins to cry, then refuses to play with her for the rest of the day. The child's action (pushing her friend) is informed by its consequence (her friend refusing to play with her), and she learns not to repeat that action if she wants to keep playing with her friend.

The law of effect has been extended to various forms of behavior modification. Because the law of effect is a key component of behaviorism, it does not include any reference to unobservable or internal states; instead, it relies solely on what can be observed in human behavior. While this theory does not account for the entirety of human behavior, it has been applied to nearly every sector of human life, particularly education and psychology.

Basic Principles of Operant Conditioning: Skinner

B. F. Skinner was a behavioral psychologist who expanded the field by defining and elaborating on operant conditioning.

Learning Objectives

Summarize Skinner's research on operant conditioning

Key Takeaways

Key Points

- B. F. Skinner, a behavioral psychologist who built on the work of E. L. Thorndike, contributed to our view of learning by expanding our understanding of conditioning to include operant conditioning.

- Skinner theorized that if a behavior is followed by reinforcement, that behavior is more likely to be repeated, but if it is followed by punishment, it is less likely to be repeated.

- Skinner conducted his research on rats and pigeons by presenting them with positive reinforcement, negative reinforcement, or punishment in various schedules that were designed to produce or inhibit specific target behaviors.

- Skinner did not include room in his research for ideas such as free will or individual choice; instead, he posited that all behavior could be explained using learned, physical aspects of the world, including life history and evolution.

Key Terms

- punishment: The act or process of imposing and/or applying a sanction for an undesired behavior when conditioning toward a desired behavior.

- aversive: Tending to repel; causing avoidance (of a situation, a behavior, an item, etc.).

- superstition: A belief, not based on reason or scientific knowledge, that future events may be influenced by one's behavior in some magical or mystical way.

Operant conditioning is a theory of behaviorism that focuses on changes in an individual's observable behaviors. In operant conditioning, new or continued behaviors are impacted by new or continued consequences. Research regarding this principle of learning was first conducted by Edward L. Thorndike in the late 1800s, then brought to popularity by B. F. Skinner in the mid-1900s. Much of this research informs current practices in human behavior and interaction.

Skinner's Theories of Operant Conditioning

Nearly half a century after Thorndike's first publication of the principles of operant conditioning and the law of effect, Skinner attempted to prove an extension to this theory: that all behaviors are in some way a result of operant conditioning. Skinner theorized that if a behavior is followed by reinforcement, that behavior is more likely to be repeated, but if it is followed by some sort of aversive stimulus or punishment, it is less likely to be repeated. He also believed that this learned association could end, or become extinct, if the reinforcement or punishment was removed.

B. F. Skinner: Skinner was responsible for defining the segment of behaviorism known as operant conditioning, a process by which an organism learns from its physical environment.

Skinner's Experiments

Skinner's most famous research studies were simple reinforcement experiments conducted on lab rats and domestic pigeons, which demonstrated the most basic principles of operant conditioning. He conducted most of his research in a special apparatus, now referred to as a "Skinner box," and tracked his test subjects' behavioral responses with a cumulative recorder. In these boxes he would present his subjects with positive reinforcement, negative reinforcement, or aversive stimuli in various timing intervals (or "schedules") that were designed to produce or inhibit specific target behaviors.

In his early work with rats, Skinner would place the rats in a Skinner box with a lever attached to a feeding tube. Whenever a rat pressed the lever, food would be released. After multiple trials, the rats learned the association between the lever and food and began to spend more of their time in the box procuring food than performing any other activity. It was through this early work that Skinner started to understand the effects of behavioral contingencies on actions. He discovered that the rate of response, as well as changes in response features, depended on what occurred after the behavior was performed, not before. Skinner named these actions operant behaviors because they operated on the environment to produce an effect. The process by which one could arrange the contingencies of reinforcement responsible for producing a certain behavior then came to be called operant conditioning.

To demonstrate his idea that operant conditioning was responsible for all actions, he later created a "superstitious pigeon." He fed the pigeon at fixed intervals (every fifteen seconds), regardless of what it was doing, and observed the pigeon's behavior. He found that the pigeon's actions would change depending on what it had been doing in the moments before the food was dispensed, even though those actions had nothing to do with the dispensing of food. In this way, he discerned that the pigeon behaved as if there were a causal relationship between its actions and the presentation of the reward. It was this development of "superstition" that led Skinner to believe all behavior could be explained as a learned reaction to specific consequences.
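Skinner's pigeon procedure can be sketched as a short simulation. In this hypothetical model, the pigeon emits one behavior per second, chosen in proportion to an assumed "strength" for each behavior; food arrives every 15 seconds no matter what the pigeon is doing, and whatever behavior happens to be under way at that moment is strengthened. The function name `superstitious_pigeon`, the behavior labels, the one-behavior-per-second timing, and the +1 strength increment are illustrative assumptions, not details of Skinner's experiment.

```python
import random


def superstitious_pigeon(behaviors, seconds=600, food_every=15, seed=0):
    """Hypothetical sketch of non-contingent (clock-driven) feeding.

    Returns (strength per behavior, number of food deliveries).
    """
    rng = random.Random(seed)
    strength = {b: 1.0 for b in behaviors}  # assumed equal starting strengths
    deliveries = 0
    for t in range(1, seconds + 1):
        # One behavior per second, chosen in proportion to its current strength.
        current = rng.choices(list(strength), weights=list(strength.values()))[0]
        if t % food_every == 0:
            # Food arrives on the clock, not because of anything the pigeon did...
            deliveries += 1
            # ...yet the behavior occurring at that moment is strengthened anyway.
            strength[current] += 1.0
    return strength, deliveries


strength, deliveries = superstitious_pigeon(["turn counterclockwise", "peck floor", "bob head"])
print(deliveries, "food deliveries")
for behavior, s in sorted(strength.items(), key=lambda kv: -kv[1]):
    print(f"{behavior:22} strength {s:.0f}")
```

Because a strengthened behavior is more likely to be under way at the next delivery, an early chance pairing can snowball; this is one way to see how accidental, non-contingent food could produce the "superstitious" patterns described above. Which behavior ends up dominant depends on the random seed.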

In his operant conditioning experiments, Skinner often used an approach called shaping. Instead of rewarding only the target, or desired, behavior, the process of shaping involves the reinforcement of successive approximations of the target behavior. Behavioral approximations are behaviors that, over time, grow increasingly closer to the actual desired response.

Skinner believed that all behavior is predetermined by past and present events in the objective world. He did not include room in his research for ideas such as free will or individual choice; instead, he posited that all behavior could be explained using learned, physical aspects of the world, including life history and evolution. His work remains extremely influential in the fields of psychology, behaviorism, and education.

Shaping

Shaping is a method of operant conditioning by which successive approximations of a target behavior are reinforced.

Learning Objectives

Describe how shaping is used to modify behavior

Key Takeaways

Key Points

- B. F. Skinner used shaping, a method of training by which successive approximations toward a target behavior are reinforced, to test his theories of behavioral psychology.

- Shaping involves a calculated reinforcement of a "target behavior": it uses operant conditioning principles to train a subject by rewarding proper behavior and discouraging improper behavior.

- The method requires that the subject perform behaviors that at first only resemble the target behavior; through reinforcement, these behaviors are gradually changed or "shaped" to encourage the target behavior itself.

- Skinner's early experiments in operant conditioning involved the shaping of rats' behavior so they learned to press a lever and receive a food reward.

- Shaping is commonly used to train animals, such as dogs, to perform difficult tasks; it is also a useful learning tool for modifying human behavior.

Key Terms

- successive approximation: An increasingly accurate estimate of a response desired by a trainer.

- paradigm: An example serving as a model or pattern; a template, as for an experiment.

- shaping: A method of positive reinforcement of behavior patterns in operant conditioning.

In his operant-conditioning experiments, Skinner often used an approach called shaping. Instead of rewarding only the target, or desired, behavior, the process of shaping involves the reinforcement of successive approximations of the target behavior. The method requires that the subject perform behaviors that at first merely resemble the target behavior; through reinforcement, these behaviors are gradually changed, or shaped, to encourage the performance of the target behavior itself. Shaping is useful because it is often unlikely that an organism will display anything but the simplest of behaviors spontaneously. It is a very useful tool for training animals, such as dogs, to perform difficult tasks.

Dog show: Dog training often uses the shaping method of operant conditioning.

How Shaping Works

In shaping, behaviors are broken down into many small, achievable steps. To test this method, B. F. Skinner performed shaping experiments on rats, which he placed in an apparatus (known as a Skinner box) that monitored their behaviors. The target behavior for the rat was to press a lever that would release food. Initially, rewards are given for even crude approximations of the target behavior; in other words, even taking a step in the right direction is rewarded. Then, the trainer rewards a behavior that is one step closer, or one successive approximation nearer, to the target behavior. For example, Skinner would reward the rat for taking a step toward the lever, for standing on its hind legs, and for touching the lever, all of which were successive approximations toward the target behavior of pressing the lever.

As the subject moves through each behavior trial, rewards for old, less approximate behaviors are discontinued in order to encourage progress toward the desired behavior. For example, once the rat had touched the lever, Skinner might stop rewarding it for simply taking a step toward the lever. In Skinner's experiment, each reward led the rat closer to the target behavior, finally culminating in the rat pressing the lever and receiving food. In this way, shaping uses operant-conditioning principles to train a subject by rewarding proper behavior and discouraging improper behavior.

In summary, the process of shaping includes the following steps:

- Reinforce any response that resembles the target behavior.

- Then reinforce the response that more closely resembles the target behavior. You will no longer reinforce the previously reinforced response.

- Next, begin to reinforce the response that even more closely resembles the target behavior. Continue to reinforce closer and closer approximations of the target behavior.

- Finally, only reinforce the target behavior.
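The steps above amount to a simple rule: reinforce a response only if it is at least as close to the target as the current criterion, then raise the criterion so that earlier approximations stop paying off. The sketch below encodes that rule for Skinner's lever-pressing example, using the approximations named in this section as an ordered list. The function `shape` and the choice to raise the criterion immediately after each reinforced response are simplifying assumptions; in practice a trainer typically reinforces an approximation several times before moving on.

```python
# Successive approximations from this section, ordered from crudest to the target.
APPROXIMATIONS = [
    "step toward lever",
    "stand on hind legs",
    "touch lever",
    "press lever",  # target behavior
]


def shape(responses, ladder=APPROXIMATIONS):
    """Hypothetical shaping rule: returns a list of (response, reinforced) pairs."""
    criterion = 0  # index of the least-close approximation still reinforced
    log = []
    for response in responses:
        # Responses that are not on the ladder at all never meet the criterion.
        level = ladder.index(response) if response in ladder else -1
        reinforced = level >= criterion
        if reinforced:
            # Stop reinforcing this approximation (and anything cruder) from now on,
            # except that the target itself keeps being reinforced.
            criterion = min(level + 1, len(ladder) - 1)
        log.append((response, reinforced))
    return log


session = [
    "groom", "step toward lever", "step toward lever",
    "stand on hind legs", "touch lever", "stand on hind legs",
    "press lever", "press lever",
]
for response, reinforced in shape(session):
    print(f"{response:20} {'food' if reinforced else '-'}")
```

The second "step toward lever" and the later "stand on hind legs" go unrewarded because the criterion has already moved past them, which is the step-by-step discontinuation described in the list; once the criterion reaches "press lever," only the target behavior is reinforced.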

Applications of Shaping

This procedure has been replicated with other animals, including humans, and is now common practice in many training and teaching methods. It is commonly used to train dogs to follow verbal commands or become housebroken: while puppies can rarely perform the target behavior automatically, they can be shaped toward this behavior by successively rewarding behaviors that come close.

Shaping is also a useful technique in human learning. For example, if a father wants his daughter to learn to clean her room, he can use shaping to help her master steps toward the goal. First, she cleans up one toy and is rewarded. Second, she cleans up five toys; then she chooses whether to pick up ten toys or put her books and clothes away; then she cleans up everything except two toys. Through a series of rewards, she finally learns to clean her entire room.

Reinforcement and Punishment

Reinforcement and punishment are principles of operant conditioning that increase or decrease the likelihood of a behavior.

Learning Objectives

Differentiate among primary, secondary, conditioned, and unconditioned reinforcers

Key Takeaways

Key Points

- "Reinforcement" refers to any consequence that increases the likelihood of a particular behavioral response; "punishment" refers to a consequence that decreases the likelihood of this response.

- Both reinforcement and punishment can be positive or negative. In operant conditioning, positive means you are adding something and negative means you are taking something away.

- Reinforcers can be either primary (linked unconditionally to a behavior) or secondary (requiring deliberate or conditioned linkage to a specific behavior).

- Primary (or unconditioned) reinforcers, such as water, food, sleep, shelter, sex, touch, and pleasure, have innate reinforcing qualities.

- Secondary (or conditioned) reinforcers, such as money, have no inherent value until they are linked or paired with a primary reinforcer.

Key Terms

- latency: The delay between a stimulus and the response it triggers in an organism.

Reinforcement and punishment are principles that are used in operant conditioning. Reinforcement means you are increasing a behavior: it is any consequence or outcome that increases the likelihood of a particular behavioral response (and that therefore reinforces the behavior). The strengthening effect on the behavior can manifest in multiple ways, including higher frequency, longer duration, greater magnitude, and shorter latency of response. Punishment means you are decreasing a behavior: it is any consequence or outcome that decreases the likelihood of a behavioral response.

Extinction, in operant conditioning, refers to when a reinforced behavior is extinguished entirely. This occurs at some point after reinforcement stops; the speed at which this happens depends on the reinforcement schedule, which is discussed in more detail in another section.

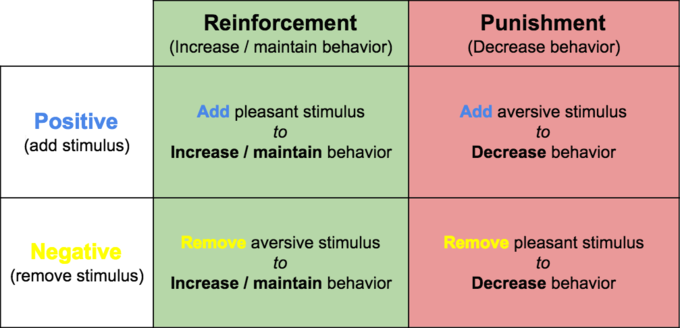

Positive and Negative Reinforcement and Punishment

Both reinforcement and punishment can be positive or negative. In operant conditioning, positive and negative do not mean good and bad. Instead, positive means you are adding something and negative means you are taking something away. All of these methods can manipulate the behavior of a subject, but each works in a unique way.

Operant conditioning: In the context of operant conditioning, whether you are reinforcing or punishing a behavior, "positive" always means you are adding a stimulus (not necessarily a good one), and "negative" always means you are removing a stimulus (not necessarily a bad one). See the blue text and yellow text above, which represent positive and negative, respectively. Similarly, reinforcement always means you are increasing (or maintaining) the level of a behavior, and punishment always means you are decreasing the level of a behavior. See the green and red backgrounds above, which represent reinforcement and punishment, respectively.

- Positive reinforcers add a wanted or pleasant stimulus to increase or maintain the frequency of a behavior. For example, a child cleans her room and is rewarded with a cookie.

- Negative reinforcers remove an aversive or unpleasant stimulus to increase or maintain the frequency of a behavior. For example, a child cleans her room and is rewarded by not having to wash the dishes that night.

- Positive punishments add an aversive stimulus to decrease a behavior or response. For example, a child refuses to clean her room, so her parents make her wash the dishes for a week.

- Negative punishments remove a pleasant stimulus to decrease a behavior or response. For example, a child refuses to clean her room, so her parents refuse to let her play with her friend that afternoon.
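Because every consequence in this scheme is defined by two independent questions (was a stimulus added or removed, and did the behavior increase or decrease), the four categories form a two-by-two grid. The small helper below, a hypothetical function named `classify_consequence`, simply spells out that grid and checks it against the four room-cleaning examples in the list.

```python
def classify_consequence(stimulus_added, behavior_increases):
    """Name an operant consequence from the two questions that define it.

    stimulus_added: True if a stimulus is added ("positive"),
        False if one is removed ("negative").
    behavior_increases: True if the behavior increases or is maintained
        ("reinforcement"), False if it decreases ("punishment").
    """
    sign = "positive" if stimulus_added else "negative"
    kind = "reinforcement" if behavior_increases else "punishment"
    return f"{sign} {kind}"


# The four examples from the list, encoded as (description, added?, increases?).
examples = [
    ("cookie for cleaning", True, True),
    ("no dishes tonight for cleaning", False, True),
    ("dishes for a week for refusing", True, False),
    ("no playdate for refusing", False, False),
]
for description, added, increases in examples:
    print(f"{description:32} -> {classify_consequence(added, increases)}")
```

Note that "good" or "bad" never enters the decision; only the direction of the stimulus change and the direction of the behavior change matter.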

Primary and Secondary Reinforcers

The stimulus used to reinforce a certain behavior can be either primary or secondary. A primary reinforcer, also called an unconditioned reinforcer, is a stimulus that has innate reinforcing qualities. These kinds of reinforcers are not learned. Water, food, sleep, shelter, sex, touch, and pleasure are all examples of primary reinforcers: organisms do not lose their drive for these things. Some primary reinforcers, such as drugs and alcohol, merely mimic the effects of other reinforcers. For most people, jumping into a cool lake on a very hot day would be reinforcing, and the cool lake would be innately reinforcing: the water would cool the person off (a physical need), as well as provide pleasure.

A secondary reinforcer, also called a conditioned reinforcer, has no inherent value and only has reinforcing qualities when linked or paired with a primary reinforcer. Before pairing, the secondary reinforcer has no meaningful effect on a subject. Money is one of the best examples of a secondary reinforcer: it is only worth something because you can use it to buy other things, either things that satisfy basic needs (food, water, shelter, all primary reinforcers) or other secondary reinforcers.

Schedules of Reinforcement

Reinforcement schedules determine how and when a behavior will be followed by a reinforcer.

Learning Objectives

Compare and contrast different types of reinforcement schedules

Key Takeaways

Key Points

- A reinforcement schedule is a tool in operant conditioning that allows the trainer to control the timing and frequency of reinforcement in order to elicit a target behavior.

- Continuous schedules reward a behavior after every performance of the desired behavior; intermittent (or partial) schedules only reward the behavior after certain ratios or intervals of responses.

- Intermittent schedules can be either fixed (where reinforcement occurs after a set amount of time or responses) or variable (where reinforcement occurs after a varied and unpredictable amount of time or responses).

- Intermittent schedules are also described as either interval (based on the time between reinforcements) or ratio (based on the number of responses).

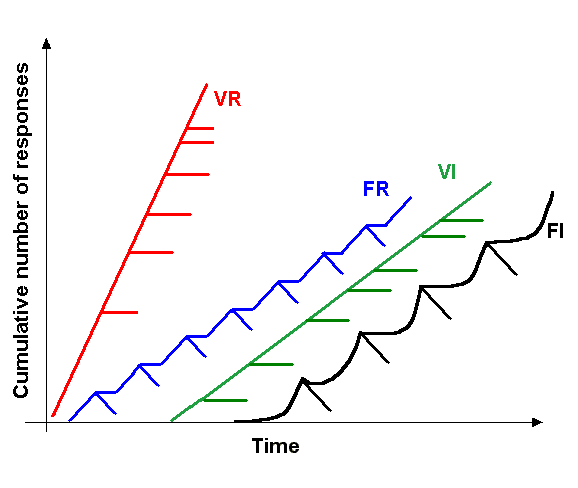

- Different schedules (fixed-interval, variable-interval, fixed-ratio, and variable-ratio) have different advantages and differ in how they respond to extinction.

- Compound reinforcement schedules combine two or more simple schedules, using the same reinforcer and focusing on the same target behavior.

Key Terms

- extinction: When a behavior ceases because it is no longer reinforced.

- interval: A period of time.

- ratio: A number representing a comparison between two things.

A schedule of reinforcement is a tactic used in operant conditioning that influences how an operant response is learned and maintained. Each type of schedule imposes a rule or plan that attempts to determine how and when a desired behavior occurs. Behaviors are encouraged through the use of reinforcers, discouraged through the use of punishments, and rendered extinct by the complete removal of reinforcement. Schedules vary from simple ratio- and interval-based schedules to more complicated compound schedules that combine two or more simple strategies to manipulate behavior.

Continuous vs. Intermittent Schedules

Continuous schedules reward a behavior after every performance of the desired behavior. This reinforcement schedule is the quickest way to teach someone a behavior, and it is especially effective in teaching a new behavior. Simple intermittent (sometimes referred to as partial) schedules, on the other hand, only reward the behavior after certain ratios or intervals of responses.

Types of Intermittent Schedules

There are several different types of intermittent reinforcement schedules. These schedules are described as either fixed or variable and as either interval or ratio.

Fixed vs. Variable, Ratio vs. Interval

Fixed refers to when the number of responses between reinforcements, or the amount of time between reinforcements, is set and unchanging. Variable refers to when the number of responses or amount of time between reinforcements varies or changes. Interval means the schedule is based on the time between reinforcements, and ratio means the schedule is based on the number of responses between reinforcements. Simple intermittent schedules are a combination of these terms, creating the following four types of schedules:

- A fixed-interval schedule is when behavior is rewarded after a set amount of time. This type of schedule exists in payment systems when someone is paid hourly: no matter how much work that person does in one hour (behavior), they will be paid the same amount (reinforcement).

- With a variable-interval schedule, the subject gets the reinforcement based on varying and unpredictable amounts of time. People who like to fish experience this type of reinforcement schedule: on average, in the same location, you are likely to catch about the same number of fish in a given time period. However, you do not know exactly when those catches will occur (reinforcement) within the time period spent fishing (behavior).

- With a fixed-ratio schedule, there is a set number of responses that must occur before the behavior is rewarded. This can be seen in payment for work such as fruit picking: pickers are paid a certain amount (reinforcement) based on the amount they pick (behavior), which encourages them to pick faster in order to make more money. In another example, Carla earns a commission for every pair of glasses she sells at an eyeglass store. The quality of what Carla sells does not matter because her commission is not based on quality; it's only based on the number of pairs sold. This distinction in the quality of performance can help determine which reinforcement method is most appropriate for a particular situation: fixed ratios are better suited to optimize the quantity of output, whereas a fixed interval can lead to a higher quality of output.

- In a variable-ratio schedule, the number of responses needed for a reward varies. This is the most powerful type of intermittent reinforcement schedule. In humans, this type of schedule is used by casinos to attract gamblers: a slot machine pays out an average win ratio, say five to one, but does not guarantee that every fifth bet (behavior) will be rewarded (reinforcement) with a win.
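The four simple intermittent schedules, plus the continuous schedule, can be expressed as rules that decide whether a given response earns a reinforcer. The sketch below is one way to do that under several assumptions: the class names and `count_reinforcers` are hypothetical, interval schedules follow the usual laboratory convention of reinforcing the first response made after the interval has elapsed, and the variable schedules draw their requirements uniformly around the stated mean (real procedures may use other distributions). A continuous schedule is treated as a fixed ratio of 1.

```python
import random


class FixedRatio:
    """Reinforce every n-th response; FixedRatio(1) is a continuous schedule."""

    def __init__(self, n):
        self.n = n
        self.count = 0  # responses since the last reinforcer

    def respond(self, t):
        self.count += 1
        if self.count >= self.n:
            self.count = 0
            return True
        return False


class VariableRatio:
    """Reinforce after an unpredictable number of responses averaging `mean`."""

    def __init__(self, mean, rng):
        self.mean = mean
        self.rng = rng
        self.count = 0
        self.required = self._draw()

    def _draw(self):
        # Assumed: uniform over 1 .. 2*mean - 1, whose average is `mean`.
        return self.rng.randint(1, 2 * self.mean - 1)

    def respond(self, t):
        self.count += 1
        if self.count >= self.required:
            self.count = 0
            self.required = self._draw()
            return True
        return False


class FixedInterval:
    """Reinforce the first response made once `interval` seconds have passed."""

    def __init__(self, interval):
        self.interval = interval
        self.available_at = interval

    def respond(self, t):
        if t >= self.available_at:
            self.available_at = t + self.interval
            return True
        return False  # responding early earns nothing


class VariableInterval:
    """Like FixedInterval, but each wait is unpredictable with average `mean`."""

    def __init__(self, mean, rng):
        self.mean = mean
        self.rng = rng
        self.available_at = self._draw()

    def _draw(self):
        # Assumed: uniform over 0 .. 2*mean seconds, whose average is `mean`.
        return self.rng.uniform(0, 2 * self.mean)

    def respond(self, t):
        if t >= self.available_at:
            self.available_at = t + self._draw()
            return True
        return False


def count_reinforcers(schedule, response_times):
    """Number of reinforcers earned by responses made at the given times (seconds)."""
    return sum(schedule.respond(t) for t in response_times)


# Usage: a subject that responds once per second for ten minutes (assumed).
rng = random.Random(42)
times = range(1, 601)
for name, schedule in {
    "continuous (FR 1)": FixedRatio(1),
    "FR 5": FixedRatio(5),
    "VR 5": VariableRatio(5, rng),
    "FI 30 s": FixedInterval(30),
    "VI 30 s": VariableInterval(30, rng),
}.items():
    print(f"{name:18} {count_reinforcers(schedule, times)} reinforcers")
```

Running every schedule against the same steady stream of responses shows the basic contrast: ratio schedules pay out in proportion to how much the subject responds, while interval schedules cap the number of reinforcers by the clock no matter how fast the subject responds.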

All of these schedules have different advantages. In general, ratio schedules consistently elicit higher response rates than interval schedules because reinforcement depends directly on how many responses the subject makes. For example, if you are a factory worker who gets paid per item that you manufacture, you will be motivated to manufacture these items quickly and consistently. Variable schedules are less predictable, so they tend to resist extinction and encourage continued behavior. Gamblers and fishermen alike can understand the feeling that one more pull on the slot-machine lever, or one more hour on the lake, will change their luck and elicit their respective rewards. Thus, they continue to gamble and fish, regardless of previously unsuccessful feedback.

Simple reinforcement-schedule responses: The four reinforcement schedules yield different response patterns. The variable-ratio schedule is unpredictable and yields high and steady response rates, with little if any pause after reinforcement (e.g., gambling). A fixed-ratio schedule is predictable and produces a high response rate, with a short pause after reinforcement (e.g., eyeglass sales). The variable-interval schedule is unpredictable and produces a moderate, steady response rate (e.g., fishing). The fixed-interval schedule yields a scallop-shaped response pattern, reflecting a significant pause after reinforcement (e.g., hourly employment).

Extinction of a reinforced behavior occurs at some point after reinforcement stops, and the speed at which this happens depends on the reinforcement schedule. Among the reinforcement schedules, variable-ratio is the most resistant to extinction, while fixed-interval is the easiest to extinguish.

Simple vs. Compound Schedules

All of the examples described above are referred to as simple schedules. Compound schedules combine at least two simple schedules and use the same reinforcer for the same behavior. Compound schedules are often seen in the workplace: for example, if you are paid at an hourly rate (fixed-interval) but also have an incentive to receive a small commission for certain sales (fixed-ratio), you are being reinforced by a compound schedule. Additionally, if there is an end-of-year bonus given to only three employees based on a lottery system, you would be motivated by a variable schedule.
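The hourly-plus-commission example can be worked through as simple arithmetic. The sketch below assumes, for illustration only, an hourly wage (which the text treats as the fixed-interval component) plus a commission paid once for every `sales_per_commission` sales (a fixed-ratio component); the function name `compound_pay` and all dollar figures are hypothetical.

```python
def compound_pay(hours_worked, sales, hourly_rate, commission, sales_per_commission):
    """Total pay under a hypothetical hourly-plus-commission compound schedule."""
    # Interval component: earned per hour on the job, independent of output.
    interval_pay = hours_worked * hourly_rate
    # Ratio component: one commission per completed block of sales;
    # a partial block (e.g., 3 of 5 sales) earns nothing yet.
    ratio_pay = (sales // sales_per_commission) * commission
    return interval_pay + ratio_pay


# An 8-hour shift at $15/hour with a $10 commission for every 5 sales:
print(compound_pay(8, 23, hourly_rate=15, commission=10, sales_per_commission=5))  # 160
```

Both components reinforce the same behavior (working) with the same reinforcer (money), which is what makes this one compound schedule rather than two unrelated ones.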

There are many possibilities for compound schedules: for example, superimposed schedules use at least two simple schedules simultaneously. Concurrent schedules, on the other hand, provide two possible simple schedules simultaneously, but allow the participant to respond on either schedule at will. All combinations and kinds of reinforcement schedules are intended to elicit a specific target behavior.

Licenses and Attributions

CC licensed content, Shared previously

- Curation and Revision. Provided by: Boundless.com. License: CC BY-SA: Attribution-ShareAlike

CC licensed content, Specific attribution

- Learning Theorists. Provided by: Wikibooks. Located at: http://en.wikibooks.org/wiki/Learning_Theorists%23Edward_Thorndike. License: CC BY-SA: Attribution-ShareAlike

- Animal Behavior/Operant Conditioning. Provided by: Wikibooks. Located at: http://en.wikibooks.org/wiki/Animal_Behavior/Operant_Conditioning. License: CC BY-SA: Attribution-ShareAlike

- Law of Effect. Provided by: Wikipedia. Located at: http://en.wikipedia.org/wiki/Law_of_Effect. License: CC BY-SA: Attribution-ShareAlike

- Boundless. Provided by: Boundless Learning. Located at: http://www.boundless.com//psychology/definition/behavior-modification. License: CC BY-SA: Attribution-ShareAlike

- Law of Effect. Provided by: Wikipedia. Located at: http://en.wikipedia.org/wiki/Law%20of%20Effect. License: CC BY-SA: Attribution-ShareAlike

- OpenStax College, Psychology. July 23, 2015. Provided by: OpenStax CNX. Located at: http://cnx.org/contents/[email protected]:35/Psychology. License: CC BY: Attribution

- trial and error. Provided by: Wiktionary. Located at: http://en.wiktionary.org/wiki/trial_and_error. License: CC BY-SA: Attribution-ShareAlike

- Provided by: Wikimedia. Located at: http://upload.wikimedia.org/wikipedia/commons/3/39/Puzzle_box.jpg. License: CC BY-SA: Attribution-ShareAlike

- Provided by: Wikimedia. Located at: http://upload.wikimedia.org/wikipedia/commons/e/e2/Lawofeffect.gif. License: CC BY-SA: Attribution-ShareAlike

- B.F. Skinner. Provided by: Wikipedia. Located at: http://en.wikipedia.org/wiki/B.F._Skinner. License: CC BY-SA: Attribution-ShareAlike

- Learning Theorists. Provided by: Wikibooks. Located at: http://en.wikibooks.org/wiki/Learning_Theorists%23B.F._Skinner. License: CC BY-SA: Attribution-ShareAlike

- Applied History of Psychology/Learning Theories. Provided by: Wikibooks. Located at: http://en.wikibooks.org/wiki/Applied_History_of_Psychology/Learning_Theories%23B.F.Skinner.281904-1990.29. License: CC BY-SA: Attribution-ShareAlike

- superstition. Provided by: Wiktionary. Located at: http://en.wiktionary.org/wiki/superstition. License: CC BY-SA: Attribution-ShareAlike

- aversive. Provided by: Wiktionary. Located at: http://en.wiktionary.org/wiki/aversive. License: CC BY-SA: Attribution-ShareAlike

- punishment. Provided by: Wiktionary. Located at: http://en.wiktionary.org/wiki/punishment. License: CC BY-SA: Attribution-ShareAlike

- Provided by: Wikimedia. Located at: http://upload.wikimedia.org/wikipedia/commons/3/3f/B.F._Skinner_at_Harvard_circa_1950.jpg. License: CC BY-SA: Attribution-ShareAlike

- IB Psychology/Perspectives/Learning. Provided by: Wikibooks. Located at: http://en.wikibooks.org/wiki/IB_Psychology/Perspectives/Learning%23Operant_conditioning. License: CC BY-SA: Attribution-ShareAlike

- Animal Behavior/Learning. Provided by: Wikibooks. Located at: http://en.wikibooks.org/wiki/Animal_Behavior/Learning%23Operant_Conditioning. License: CC BY-SA: Attribution-ShareAlike

- Shaping (psychology). Provided by: Wikipedia. Located at: http://en.wikipedia.org/wiki/Shaping_(psychology). License: CC BY-SA: Attribution-ShareAlike

- paradigm. Provided by: Wiktionary. Located at: http://en.wiktionary.org/wiki/paradigm. License: CC BY-SA: Attribution-ShareAlike

- successive approximation. Provided by: Wikipedia. Located at: http://en.wikipedia.org/wiki/successive%20approximation. License: CC BY-SA: Attribution-ShareAlike

- OpenStax College, Psychology. July 24, 2015. Provided by: OpenStax CNX. Located at: http://cnx.org/contents/[email protected]:35/Psychology. License: CC BY: Attribution

- Correctional Services Dog Unit 01. Provided by: Wikimedia. Located at: http://commons.wikimedia.org/wiki/File:Correctional_Services_Dog_Unit_01.JPG. License: Public Domain: No Known Copyright

- Reinforcement. Provided by: Wikipedia. Located at: http://en.wikipedia.org/wiki/Reinforcement. License: CC BY-SA: Attribution-ShareAlike

- Learning Theories/Behavioralist Theories. Provided by: Wikibooks. Located at: http://en.wikibooks.org/wiki/Learning_Theories/Behavioralist_Theories. License: CC BY-SA: Attribution-ShareAlike

- latency. Provided by: Wiktionary. Located at: http://en.wiktionary.org/wiki/latency. License: CC BY-SA: Attribution-ShareAlike

- Original figure by Catherine McCarthy. Licensed CC BY-SA 4.0. Provided by: Catherine McCarthy. License: CC BY-SA: Attribution-ShareAlike

- UNIT 6: LEARNING. Provided by: Saylor. Located at: http://www.saylor.org/site/wp-content/uploads/2011/01/TLBrink_PSYCH06.pdf. License: CC BY: Attribution

- ratio. Provided by: Wiktionary. Located at: http://en.wiktionary.org/wiki/ratio. License: CC BY-SA: Attribution-ShareAlike

- Boundless. Provided by: Boundless Learning. Located at: http://www.boundless.com//biology/definition/extinction. License: CC BY-SA: Attribution-ShareAlike

- interval. Provided by: Wiktionary. Located at: http://en.wiktionary.org/wiki/interval. License: CC BY-SA: Attribution-ShareAlike

- Provided by: Wikimedia. Located at: http://upload.wikimedia.org/wikipedia/commons/8/8f/Schedule_of_reinforcement.png. License: CC BY-SA: Attribution-ShareAlike

Source: https://courses.lumenlearning.com/boundless-psychology/chapter/operant-conditioning/
